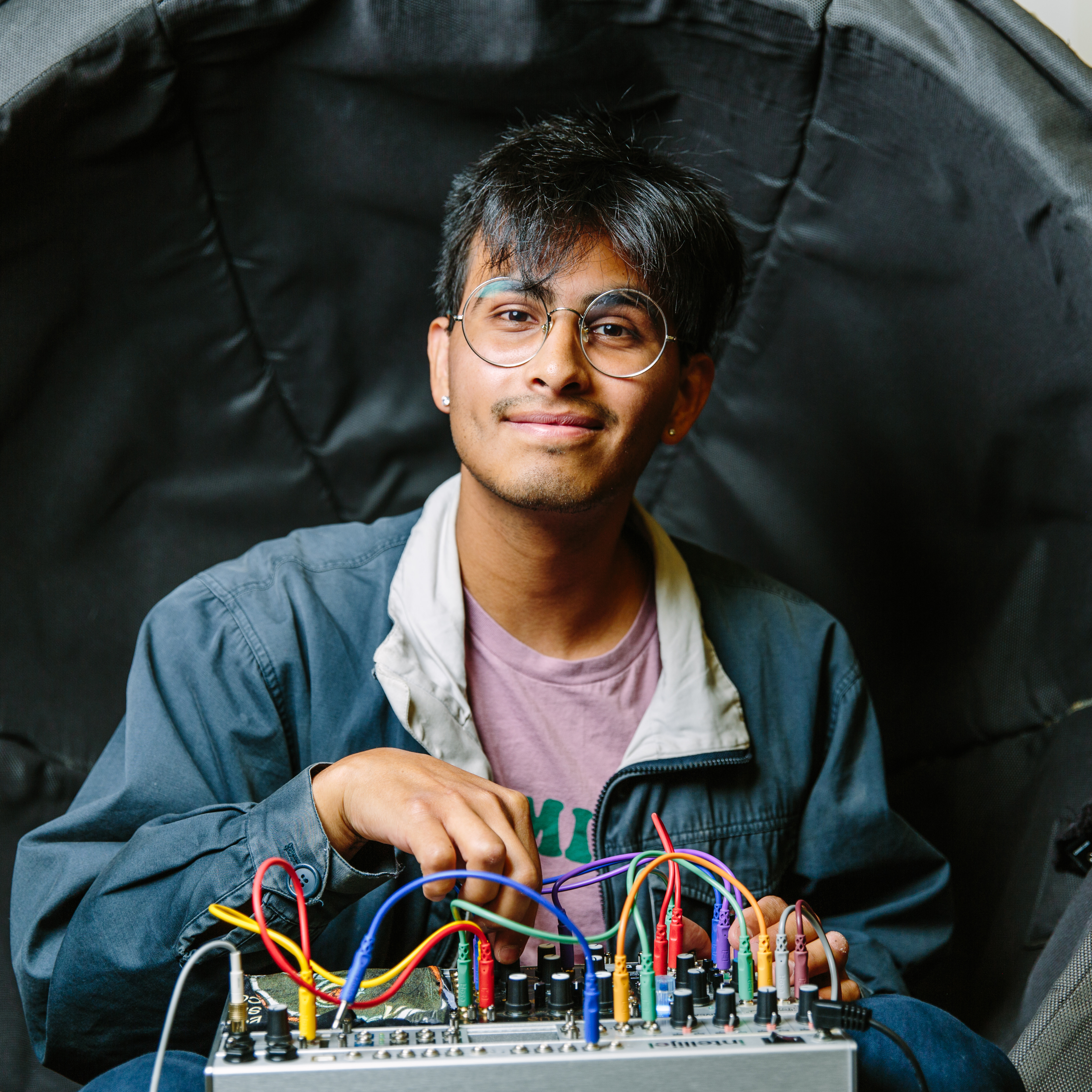

Hugo Flores García

AI and the Art of Sound

Hugo Flores García, a PhD student in the Interactive Audio Lab led by Professor Bryan Pardo, works at the intersection of applied machine learning, music, sound art, and human-computer interaction.

His research focuses on designing new musical instruments for creative expression and on building artist-centered machine learning systems for practicing musicians and sound artists.

Hugo defended his dissertation in July 2025.

Can you give an overview of your dissertation?

My dissertation critiques large commercial AI music endeavors that claim they can make a "democratized" musical instrument -- a system they claim can be used by anyone to make music, regardless of their musical background or level of expertise. I believe setting out to build a universal musical instrument is an ill-formed goal: music is not a homogeneous blob, but an umbrella term encompassing countless evolving communities of artistic practice, each with a unique set of styles, techniques, and aesthetic values. While a more casual listener values things like the "genre" or "mood" of a song, I (as a practicing instrumentalist) value things like melodic/harmonic contour, rhythm, dynamics, and overall gesture.

Following these large commercial AI music efforts, most state-of-the-art research in generative audio modeling relies on text-prompting as a primary form of interaction with users.

Text prompting is a very limited control mechanism for making sounds: a sound is worth more than a thousand words. Sonic structures like a syncopated rhythm or the timbre of an evolving sound are hard to describe in text. They can be more easily described through a sonic gesture, like a vocal imitation.

In my dissertation, I contribute two technical research works that explore more controllable, gestural, interactive, and artist-centered alternatives to text-prompted generative models: VampNet and Sketch2Sound**. Instead of prompting via text, both of these approaches let you create sounds using other sounds as a guide (like a vocal imitation of you going woosh!!!). I highly recommend checking out the demo video for Sketch2Sound here!

My dissertation also introduces the neural tape loop: a co-creative generative musical meta-instrument* for experimental music and sound art designed and developed using practice-based research methods. I propose new ways to make sounds based on the inherent properties of masked acoustic token models, and illustrate the musical capabilities of these techniques through four original creative works (a composed improvisation, two fixed media electroacoustic pieces, and a multichannel interactive sound installation) made in collaboration with sound artists, composers, and instrumentalists.

Finally, I reflect on how engaging in a mixed creative and technical research practice can be a catalyst for culturally situated and artist-centered innovation and advancement in generative musical instrument design.

* A meta-instrument is a musical instrument from which we can build other musical instruments!

** It is worth noting that Sketch2Sound has been patented and released by Adobe as part of their Firefly suite of tools. You can try it here.

You can find press related to Sketch2Sound below:

- Adobe’s new AI tool turns silly noises into realistic audio effects

- Adobe Firefly Can Now Create Sound Effects With Your Voice and Adds More Advanced Video Capabilities

- Adobe’s Project Super Sonic uses AI to generate sound effects for your videos

Starting at the end of September, I'll be joining Adobe Research as a Research Scientist in the SODA (SOund Design Ai) group, where I will keep building artist-centered generative instruments that preserve the gestural, expressive, and performative nature of Foley sound and sound design.